This is an excerpt from the book series Philosophy for Heroes: Act.

How does the brain perceive its environment?

To grasp the brain’s underlying functionality, it is best to look at how information flows through individual parts of the brain. While we have already discussed some aspects of the visual cortex, let us revisit it in more detail to see how different systems in the brain interact.

Lobe A lobe is an anatomical division or extension of an organ.

Occipital lobe The occipital lobe is part of the neocortex and contains the visual cortex which is responsible for processing visual sense data.

Cerebral hemispheres The cerebral hemispheres consist of the occipital lobe, the temporal lobe, the parietal lobe, and the frontal lobe. The two hemispheres are joined by the corpus callosum.

Figure 5.10 shows the architecture of the visual system.

- Right visual field: Light from the right visual field hits the left sides of the retinas of each eye.

- Left visual field: Light from the left visual field hits the right sides of the retinas of each eye.

- Left retina sides: Sense data from both left retina sides are communicated through the optic chiasm and combined in the visual cortex in the occipital lobe of the left cerebral hemisphere.

- Right retina sides: Sense data from both right retina sides are combined in the visual cortex in the occipital lobe of the right cerebral hemisphere.


Ultimately, information from the right visual field is processed in the left visual cortex, and information from the left visual field is processed in the right visual cortex. Between the optic chiasm and the visual cortex, the signal passes through the left and right lateral geniculate nuclei (LGN). There, the sense data is pre-processed and transferred to various brain parts, the most important being the visual cortex. Pre-processing means that the brain analyzes the images and modifies them for further processing by other brain parts.
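The routing described above can be sketched as a small lookup. This is only a toy illustration, not a model of real neural wiring; the function name `route`, the helper `retina_side`, and the dictionary keys are all hypothetical.

```python
# Toy model of the visual routing described above.
# All function and key names are hypothetical, chosen for illustration only.

OPPOSITE = {"left": "right", "right": "left"}


def retina_side(visual_field: str) -> str:
    """Light from one visual field hits the opposite side of both retinas."""
    return OPPOSITE[visual_field]


def route(visual_field: str, working_eyes=("left", "right")) -> dict:
    """Trace a visual field to the hemisphere that processes it."""
    side = retina_side(visual_field)
    return {
        "retina_side": side,
        # Same-side retina halves of both eyes are combined in the
        # visual cortex of the hemisphere on that side.
        "hemisphere": side,
        # Every working eye has both a left and a right retina half,
        # so each working eye contributes to both hemispheres.
        "contributing_eyes": sorted(working_eyes),
    }


print(route("right"))
print(route("left", working_eyes=("right",)))
```

The second call illustrates why the crossing is robust: with only one working eye, each hemisphere still receives input, because a single eye carries both retina halves.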

There are a number of possible reasons evolution led to this rather convoluted architecture where the optic nerves of both eyes cross in the optic chiasm and split the information from the eyes’ left and right visual fields. One of them is robustness. If the left eye were directly connected to the left visual cortex and the right eye directly connected to the right visual cortex, losing one eye would mean that one visual cortex would either no longer receive any input, or it would receive the input too late because it first had to be transferred from the other hemisphere. The crossing in the optic chiasm enables the visual system to work even when only one eye functions properly.

Color Perception

To understand vision, we first need to understand color and light. Light rays are electromagnetic waves. Depending on their wavelength, we experience them as different colors on the color spectrum (so-called spectral colors, see Figure 5.11). To perceive colors, our eyes have photoreceptor cells sensitive to red, green, and blue light (see Figure 5.12). In addition, shorter wavelengths activate both the red and blue photoreceptor cells, which makes that light look purple.


Now, imagine throwing a stone into a lake. Depending on the size of the stone, water waves of different sizes will emerge. A large stone will lead to long water wavelengths; a small stone will lead to short water wavelengths. If you threw multiple stones into the water, there would still be water waves, but they would overlap with each other. Just as no single stone can create such overlapping water waves, no single wavelength of light can create all the colors we can perceive. This is why, beyond the colors on the electromagnetic spectrum, we also experience extra-spectral colors like white, gray, black, pink, or brown when different photoreceptor cells are activated in combination. Those colors can be compared with multiple stones thrown into the water. For example, a pink flower reflects red light waves, but also reflects green and blue light waves. Similarly, white, gray, and black are the product of different wavelengths at the same intensity, and brown is simply orange at low light intensity.
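The combinations above can be illustrated with a minimal sketch. It assumes colors are encoded as three activation levels (red, green, blue) from 0 to 255, as on a computer display; this encoding, the helper names `dim` and `is_achromatic`, and the specific values for orange and pink are illustrative assumptions, not a model of real photoreceptor cells.

```python
# Colors as (red, green, blue) activation levels from 0 to 255.
# This encoding is an illustrative assumption borrowed from computer displays.


def dim(rgb, percent):
    """Scale every channel to the given percentage of its intensity."""
    return tuple(c * percent // 100 for c in rgb)


def is_achromatic(rgb):
    """White, gray, and black activate all three channels equally."""
    return rgb[0] == rgb[1] == rgb[2]


orange = (255, 165, 0)           # hypothetical display value for orange
brown = dim(orange, 40)          # orange at low intensity
pink = (255, 150, 180)           # mostly red, plus some green and blue
gray = dim((255, 255, 255), 50)  # white at half intensity

print("brown:", brown)
print("gray:", gray, "achromatic:", is_achromatic(gray))
print("pink achromatic:", is_achromatic(pink))
```

In this sketch, none of the resulting colors corresponds to a single wavelength: each is defined only by how strongly the three channels are activated together.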

That extra-spectral colors are a combination of different wavelengths of light was not discovered until Newton’s famous prism experiment in 1666. Before that, it was thought that prisms produce colors, but Isaac Newton showed that a prism merely splits light into its spectrum. By adding a second prism, Newton proved that the red light from the first prism produced only red light in the second prism, and that he could recombine different colors of light back into white light (see Figure 5.14). This experiment became a symbol of the Scientific Revolution because it replaced a subjective understanding of light with objective, observable facts.

The existence of extra-spectral colors shows that our subjective experience of the world is pre-processed. The LGN integrates the information from the retina into color information. For example, if we look at a purple van, our red and blue photoreceptor cells are activated. But no matter how close we get to the van, we never see separate blue or red color elements. What we see is pre-processed for us: different sources of sensory information are combined into new data, the color purple.


More complex processing involves the inclusion of the three-dimensional data the LGN has derived from sense data. It is used to further modify the way the LGN integrates color perception. Consider the checker shadow illusion in Figure 5.15: while in the first picture it looks like a checkerboard with a three-dimensional black ball casting its shadow over the board, the second picture shows that both marked squares were printed (or are displayed) with the identical shade of gray. Our brain processes the image to give us the impression of how the checkerboard would look without the shadow. Without this processing, it would look like the shadow was actually printed on the checkerboard.
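One simplified way to think about this correction is as "discounting" the assumed lighting. The sketch below rests on the physical relation that the light reaching the eye is roughly the surface reflectance multiplied by the illumination; the function names and all numbers are hypothetical, and the brain's actual computation is certainly more involved.

```python
# Simplified sketch: light reaching the eye = surface reflectance * illumination.
# All numbers are made-up illustrative values.


def luminance(reflectance, illumination):
    """Amount of light a surface sends to the eye."""
    return reflectance * illumination


def estimated_reflectance(lum, assumed_illumination):
    """Undo the assumed lighting to estimate the surface shade."""
    return lum / assumed_illumination


# A light square inside the shadow and a dark square in full light.
light_square_in_shadow = luminance(reflectance=0.8, illumination=0.5)
dark_square_in_light = luminance(reflectance=0.4, illumination=1.0)

# Both squares send the same amount of light to the eye.
print(light_square_in_shadow, dark_square_in_light)

# After discounting the shadow, they are judged as different shades.
print(estimated_reflectance(light_square_in_shadow, 0.5))
print(estimated_reflectance(dark_square_in_light, 1.0))
```

Under these assumptions, two squares with identical printed gray values end up with different estimated shades once the shadow is taken into account, which matches the impression the illusion creates.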


The Brain as a Prediction Machine

Processing visual and auditory data requires time. Studies have shown that the brain needs around 190 ms for visual stimuli and 160 ms for auditory stimuli [Welford, 1980]. This poses a significant problem: any decision the brain makes is calculated based on old information. A delay of 190 ms does not sound like very much. But imagine a ball that is thrown at you with a speed of 100 km per hour (or about 28 meters per second). In 190 ms, the ball travels a distance of around five meters. With that delay, you would always catch the ball too late. This problem becomes even more complicated if you are moving. To deal with this delay, the brain tries to predict where objects will be in the near future based on their current speed. This helps other parts of the brain to make more accurate decisions, for example, catching the ball at the right moment.
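The arithmetic behind the ball example, and the simplest possible form of prediction, can be sketched as follows. The function names are hypothetical, and the straight-line extrapolation is an assumption made for illustration; it is not a claim about how the brain actually computes its predictions.

```python
# Worked arithmetic for the ball example, plus a naive straight-line prediction.
# Function names and the constant-speed assumption are illustrative only.


def kmh_to_ms(speed_kmh):
    """Convert kilometers per hour to meters per second."""
    return speed_kmh * 1000 / 3600


def distance_during_delay(speed_kmh, delay_ms):
    """Distance an object covers while the brain is still processing."""
    return kmh_to_ms(speed_kmh) * delay_ms / 1000


def predict_distance(seen_distance_m, approach_speed_ms, delay_ms):
    """Estimate where an approaching object is now, given a delayed observation."""
    return seen_distance_m - approach_speed_ms * delay_ms / 1000


speed = kmh_to_ms(100)
print(f"100 km/h is about {speed:.1f} m/s")
print(f"distance covered in 190 ms: {distance_during_delay(100, 190):.2f} m")

# A ball seen 10 m away is already much closer by the time it is perceived.
print(f"predicted current distance: {predict_distance(10, speed, 190):.2f} m")
```

The sketch shows that a ball perceived at ten meters is, under these assumptions, already less than five meters away by the time the visual information has been processed.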

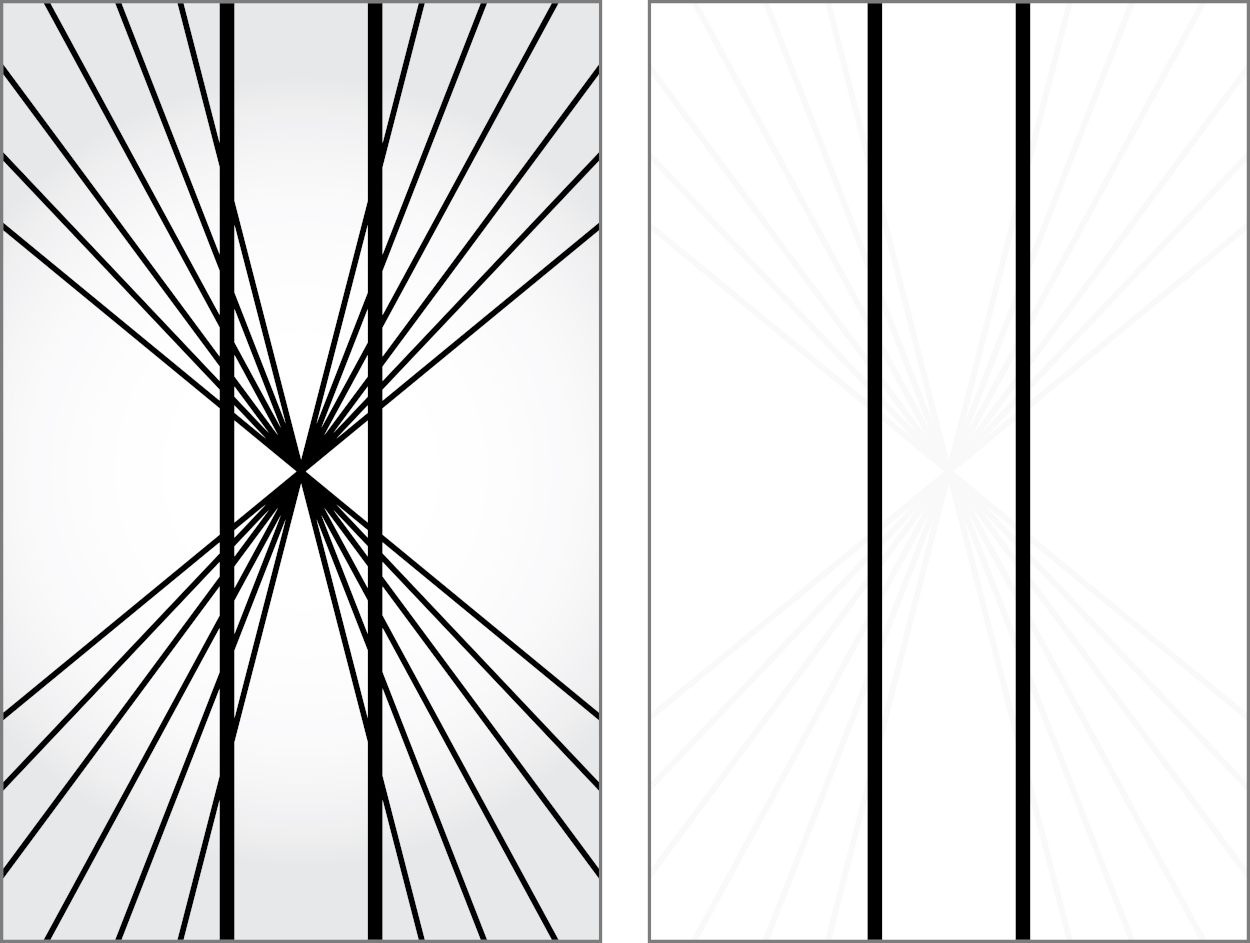

There are a few instances where this prediction process of the brain fails noticeably. Consider the Hering illusion in Figure 5.16: the straight lines near the central point appear to curve outward. Our visual system tries to predict the way the underlying scene would look in the next instant if we were moving toward its center. The cost of predicting the future to reduce reaction times is that we sometimes experience this correction as an optical illusion [Changizi et al., 2008].

While we can establish that optical illusions are the product of pre-processing and ultimately useful for us, the question is why there is a difference between how we experience what we see and what is actually there. One could argue that it is just an optimization or filter by the brain for specialized applications (movement, 3D, faces, and so on). But this does not explain why this optimization feels real to us, even when we have evidence to the contrary.

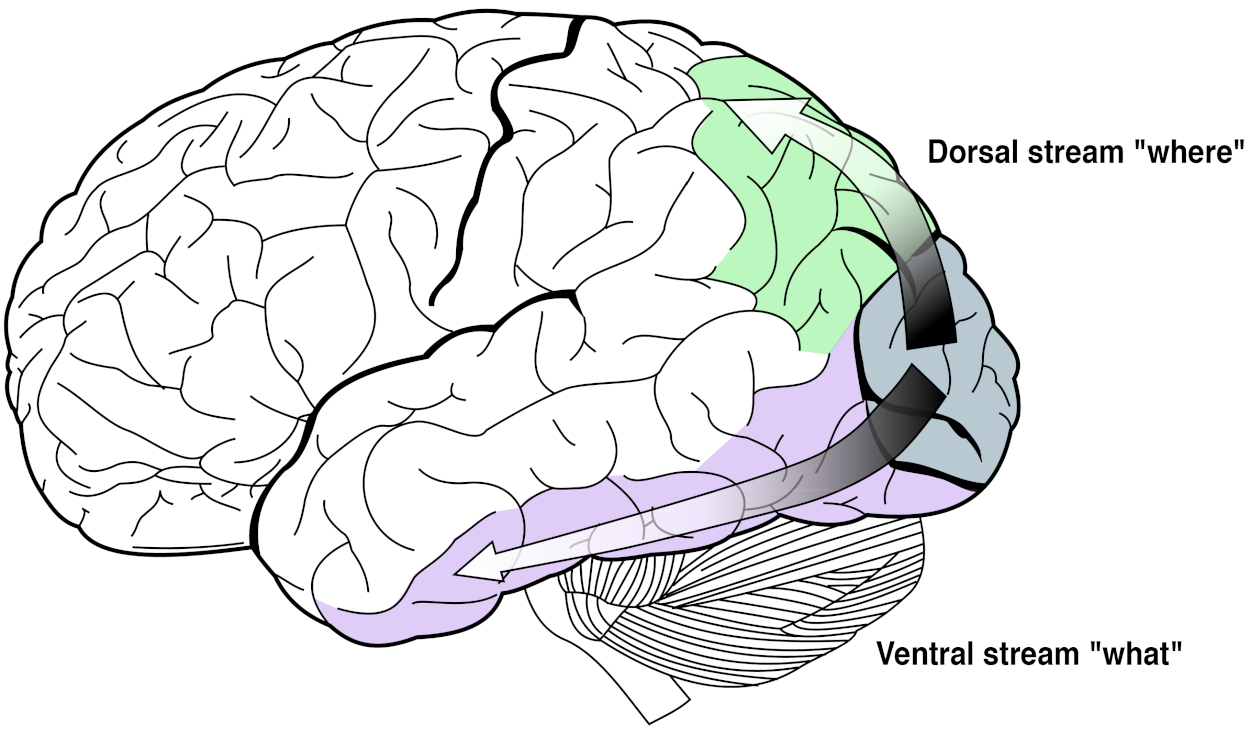

The Ventral and Dorsal Streams

The output from the visual cortex continues as two separate data streams:

- The ventral (lower) stream (the what) through the temporal lobe (see Figure 5.17); and

- The dorsal (upper) stream (the where and how) through the parietal lobe.

The temporal lobe processes visual information to categorize what things are, while the parietal lobe integrates the spatial information into a map of where and how things are. For example, looking at an apple on a table, the temporal lobe identifies that there is an apple and a table, and the parietal lobe identifies that the apple is on top of the table.

Temporal lobe The temporal lobe is the part of the neocortex that deals with the “what”: long-term memory, and object, face, and speech recognition.

Parietal lobe The parietal lobe is the part of the neocortex that deals with the “where,” especially the location of entities, the “how,” as well as touch perception.

Compared to the dorsal stream, the ventral stream is processed more quickly. It is more important to know what you see (a tiger, a fire, an acquaintance, etc.) than where it is. This idea of prioritization of the processing of the sense data can also be found in the general architecture of the visual system.

One could ask why our visual cortex is at the very back of our brain given that it takes valuable time for a signal to pass through the brain. Why not have all visual processing at the front or at least directly behind the eyes? Given the actual architecture, processing in the visual cortex seems to have a low priority. It is furthest away from the eyes—the opposite of what we would expect from an organ that can require an immediate response.

Looking at it from an engineering point of view and turning the question around, we would ask: what essential functions of the visual system should be put at the front? That is, reflexes to close your eyelids to protect your eyes, to combine information from your left and right eye, to turn your head to a source of movement or sound, to focus your lenses, and to constrict your pupils to protect your retina. All brain parts responsible for these abilities are positioned around the superior colliculi near the eyes. They provide us with reflexes and mechanisms to protect the eyes and refocus them.

After the initial processing in the superior colliculi, the processing goes through the LGN. There, a three-dimensional representation of the world is created, with just enough detail to allow for quick—possibly life-saving—reactions. For example, in a ball game, if we always had to first conceptualize that a ball is flying at us and plan for its arrival, our reactions would be very slow. Learning to catch a ball requires us to bypass conceptualization and just “do”—trusting our instincts supported by early calculations in the LGN before the information even reaches our visual cortex.

The Temporal Lobes

The temporal lobes of the cerebrum are positioned on the left and right side of the brain, near the ears. They process auditory signals and are responsible for identifying what is being said (or seen, as part of the ventral stream). Not only does this brain part identify what you see, but it also maps it to language (in Wernicke’s area of the left temporal lobe). If Wernicke’s area were damaged, you would still use individual words correctly, but in combination, the words might not make any sense.

Wernicke’s area Wernicke’s area is located in the left temporal lobe. Its function is the comprehension of speech. Damage to Wernicke’s area leads to people losing the ability to form meaningful sentences. It is connected to Broca’s area, which is responsible for muscle activation to produce speech.

The brain part in the right hemisphere corresponding (homologous) to Wernicke’s area deals with subordinate meanings of ambiguous words (for example, “bank” refers to a financial institution but could also refer to a river bank, Harpaz et al. [2009]). With the ventral stream, you can identify that you are seeing a tree and connect it to the abstract concept of a tree. It also allows you to map the concept of a tree to the image of a tree or to the sound of the word “tree.” As we have learned in Philosophy for Heroes: Knowledge, this mapping is ultimately connected to a past experience. In terms of learning languages, we connect a word with the experiences we had when hearing or reading the word in the past. Someone pointed to a tree, said “tree,” and we connected sound and image with the concept of a tree. As this suggests, the temporal lobe is essential to processing memories. This is supported by the fact that the temporal lobe is also connected to the hippocampus, providing spatial memory and short-term memory, as well as helping to create long-term memories.

One could argue that a comic strip is the brain’s internal representation of what is left after the brain has processed an image, just like the word “tree” is a representation of a real tree. To conceptualize the environment, the brain tries to strip all superfluous information from an image [Morgan et al., 2019]. For example, Figure 5.18 shows a simplified drawing of a kitchen. We can immediately recognize it as a kitchen. Abstract symbols that are stripped of all superfluous information (colors, textures, etc.) are even easier to recognize and less ambiguous, hence their use in street signs.

Facial Recognition

Given that we can remember thousands of faces even though they differ only minimally, it is no surprise that we have specialized mental machinery specifically for faces or face-like structures. The temporal lobe contains the fusiform face area that deals only with identifying faces. People who lack this ability of the brain to pre-process faces have prosopagnosia (“face-blindness”). Imagine that everyone you meet is wearing a mask: you would have to remember what clothes someone usually wore, how her voice sounded, and categorize people by their hairstyle, height, or body type. Similarly, if we have not encountered enough people from a particular background (Asian, African, European) to have learned to distinguish their facial features, we might have difficulty recognizing individual differences.

Our face recognition is so important that it goes as far as seeing faces where there are none. For example, a standard American power outlet is just that, a power outlet (see Figure 5.19). We are “projecting” a surprised face onto it, although we are absolutely sure that there is no (human) face in the wall. It seems that we share this ability to recognize basic facial features (two circles and a mouth) at least with reptiles, going back more than 300 million years in our evolutionary history [Versace et al., 2020]. Looking for faces everywhere can help us to quickly recognize a friend (or enemy), at the low cost of identifying faces when there are none.


The downside of this pre-processing is that we can have a harder time focusing on details of a (known) face. The “Thatcher effect” is a demonstration of this. Looking at Figure 5.20, you can see a young woman’s face with a neutral expression. But turn this book upside down, and you will see a woman with a creepy grimace. This is because your ability to recognize faces is optimized to recognize people standing on their feet rather than hanging upside down from a tree. For the neural networks our brain uses, it would take extra effort to check whether or not mouths and eyes are oriented in the right direction. People with prosopagnosia are affected by the Thatcher effect, too, as their only struggle is with the classification of the face as a whole. They still use the same machinery as people without prosopagnosia to classify individual facial features (like the eyes or mouth). It is like the opposite of not being able to see the forest for the trees: we get the meaning of something (“this is a face of a young woman”) but miss the details (“her eyes are upside down”).

The extrastriate body area and the fusiform body area are similar to the fusiform face area. They are located in the visual cortex near the fusiform face area and deal with recognizing body parts and body shapes, and analyzing the relationship of moving limbs. A weaker connection between the extrastriate body area and the fusiform body area can lead to misjudgements of one’s own body size and to illnesses like anorexia nervosa [Suchan et al., 2013].

Delusions

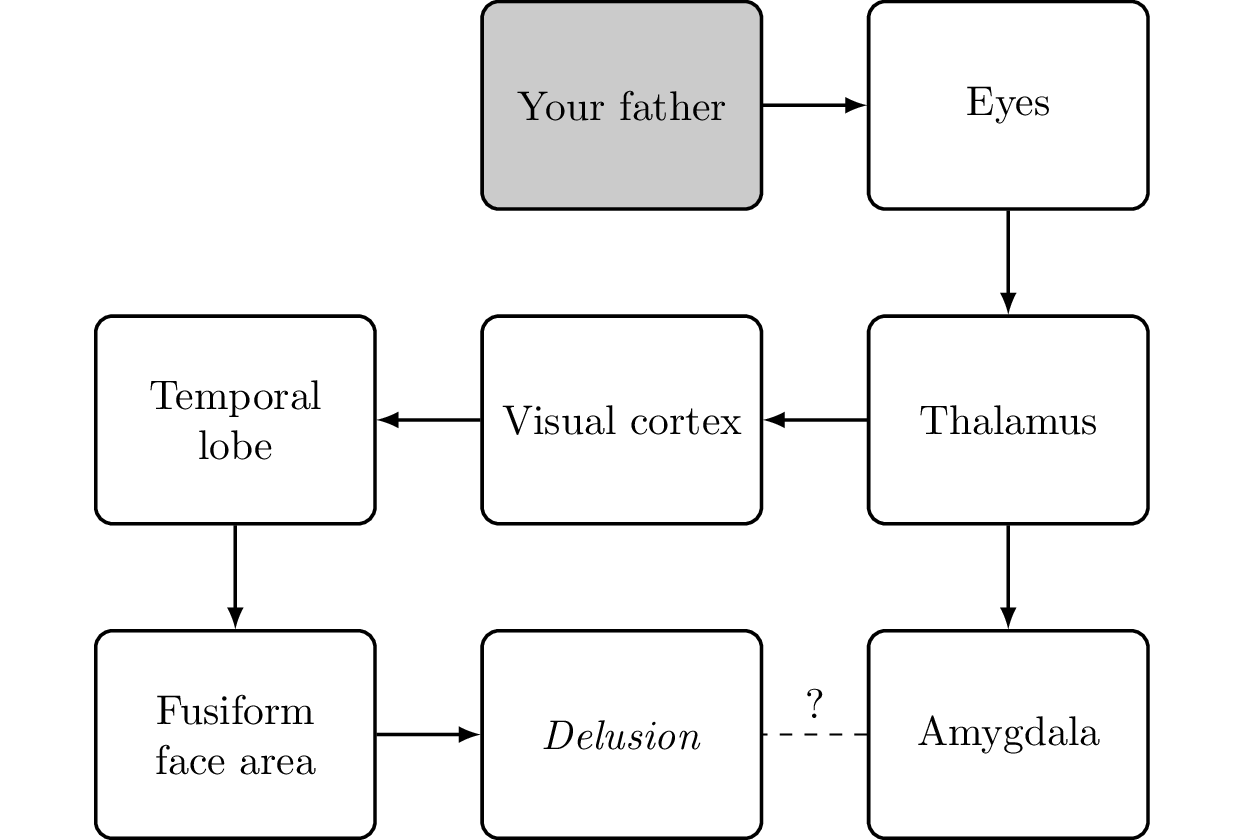

Faces are evaluated not just in the temporal lobe but also in the amygdala. If the fusiform face area were not working properly, we would still experience an emotion but would not recognize the face. If there is a problem with the connection between the thalamus and the amygdala, we might recognize a face but would lack the emotional response to the face (see Figure 5.21). This can lead to a monothematic delusion, namely the Capgras delusion [Ramachandran, 1998].

Monothematic delusion A monothematic delusion is a delusion focused on a single topic. A delusion is a firm belief that cannot be swayed by rational arguments. It is distinct from false beliefs that are based on false or incomplete information, erroneous logical conclusions, or perceptual problems.

Capgras delusion A person suffering from Capgras delusion can recognize people who are close to him, but he thinks they have been replaced by clones or doppelgangers. One cause for this condition is a damaged or missing connection from the amygdala that leads to a person having no emotional connection to those whom he sees.

The Capgras delusion is the belief that a person emotionally close to you (for example, your father) has been replaced by an imposter. You definitely see that the person is exactly as you have him in your memory; it is just that you no longer feel an emotional connection to him. For the brain, the most apparent (although weird) way to resolve this conflict is to assume that someone is impersonating your loved one. You cannot give clear reasoning for it, but based on the facts (the sense data identifying the person plus the lack of an emotional connection), it is the most logical conclusion. This can also happen in healthy people with movie actors. Having built an emotional connection to the character a person plays, there is a disconnect when meeting the actor in person: the actor looks exactly like the character but the emotional connection to the actor is missing. The best explanation the brain might come up with is that the person whom you are seeing (and who is the actor) is an imposter. This is more probable when meeting the actor in daily life (at the grocery store) than in the usual environment (conventions, conferences, movie award events, etc.).

Other noteworthy delusions are:

- Fregoli delusion (all the people you meet are actually the same person in disguise);

- Syndrome of subjective doubles (there is a doppelganger of yourself acting in your name);

- Cotard delusion (you are dead or do not exist);

- Mirrored-self misidentification (the person in the mirror is someone else); and

- Reduplicative paramnesia (a place or object has been duplicated, like the belief that the hospital to which a person was admitted is a replica of an actual hospital somewhere else).

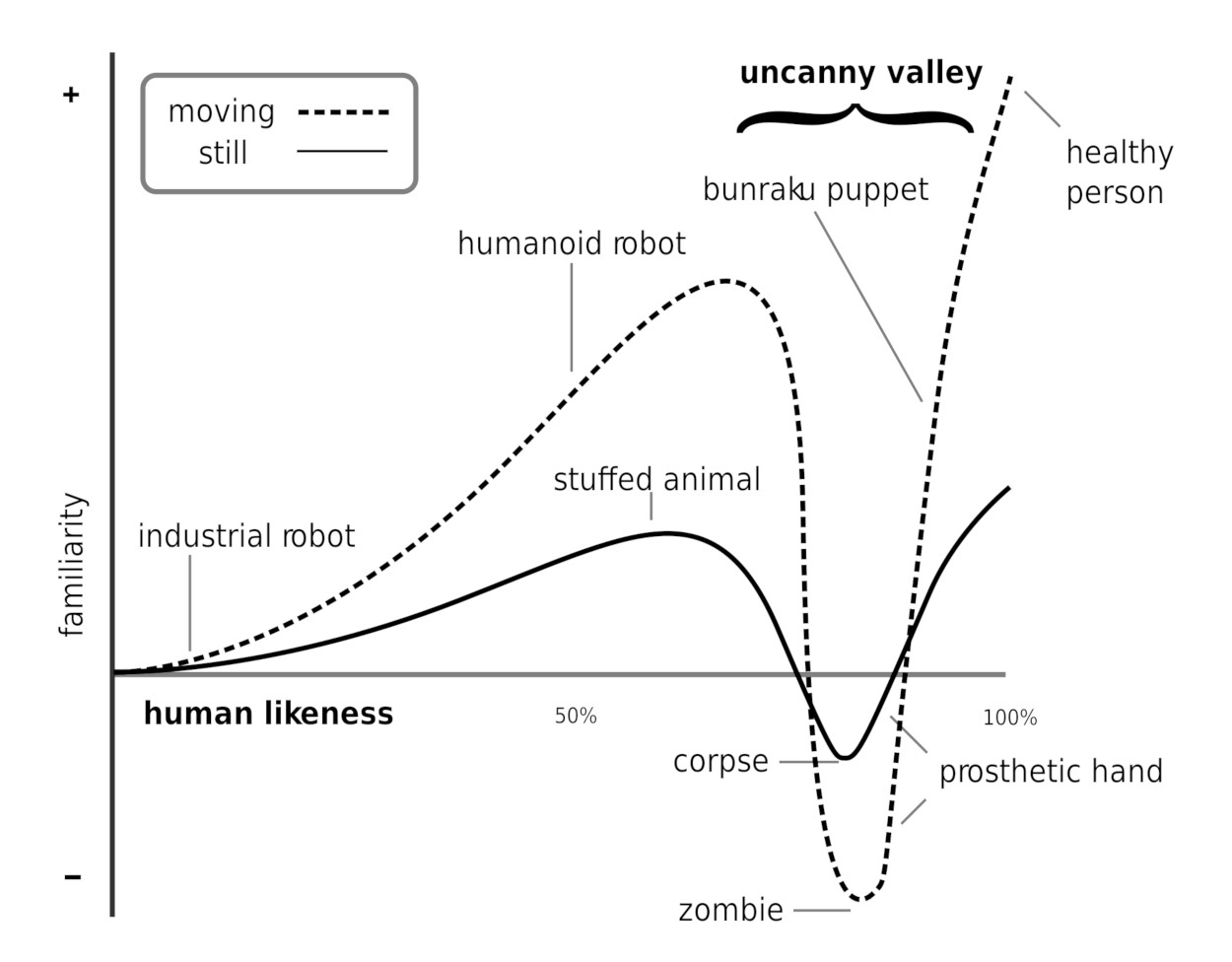

Somewhat related to delusions is the “uncanny valley effect” [Rosenthal-von der Putten et al., 2019]. We have no problem dealing with dolls. However, when they become too human-like (but not fully human-like), we experience feelings of eeriness and revulsion (see Figure 5.22). This is why zombies (and to an extent, human-like robots) are used in horror movies. Even modern computer graphics artists have serious problems creating believable faces. We can notice the smallest deviation from reality even if the face geometry fully matches the human face. The artist has to hit all the marks when it comes to lighting, mouth movements, nose shadows, skin pore structure, and so on. This is no surprise, as research points to face recognition being highly evolved: it is also used to detect kinship [Kaminski et al., 2009], which is an evolutionary advantage in taking care of relatives as well as in mate selection.

Uncanny valley effect The uncanny valley effect refers to the negative reaction to dolls or robots that are very (but not fully) human-like. Possible reasons for this reaction are an inbuilt instinct to avoid corpses, and the inner conflict and discomfort of switching back and forth between seeing a being as fully human or a lifeless object.

The uncanny valley effect is similar to the Capgras delusion in that there are two pieces of conflicting information in the brain. One pathway tells you “This is a human!” while another warns “No, that is no human,” and the brain has to sort it out somehow, leaving you with a strange feeling of uncertainty. Some of us might have experienced the sudden shock at night when we see a person standing in our living room, only to discover that it is just the clothes rack. The physical presence of a person implies relationships, conflicts, alliances, and enemies. Humans can be more dangerous than the wildest animal, hence anything that resembles a human gets the most immediate attention. In addition, some of the discomfort could also stem from pathogen avoidance (a nearly human-like robot could also be seen as a real human with a serious illness or even as a corpse, see Moosa and Ud-Dean [2010]).